StageDiffusion

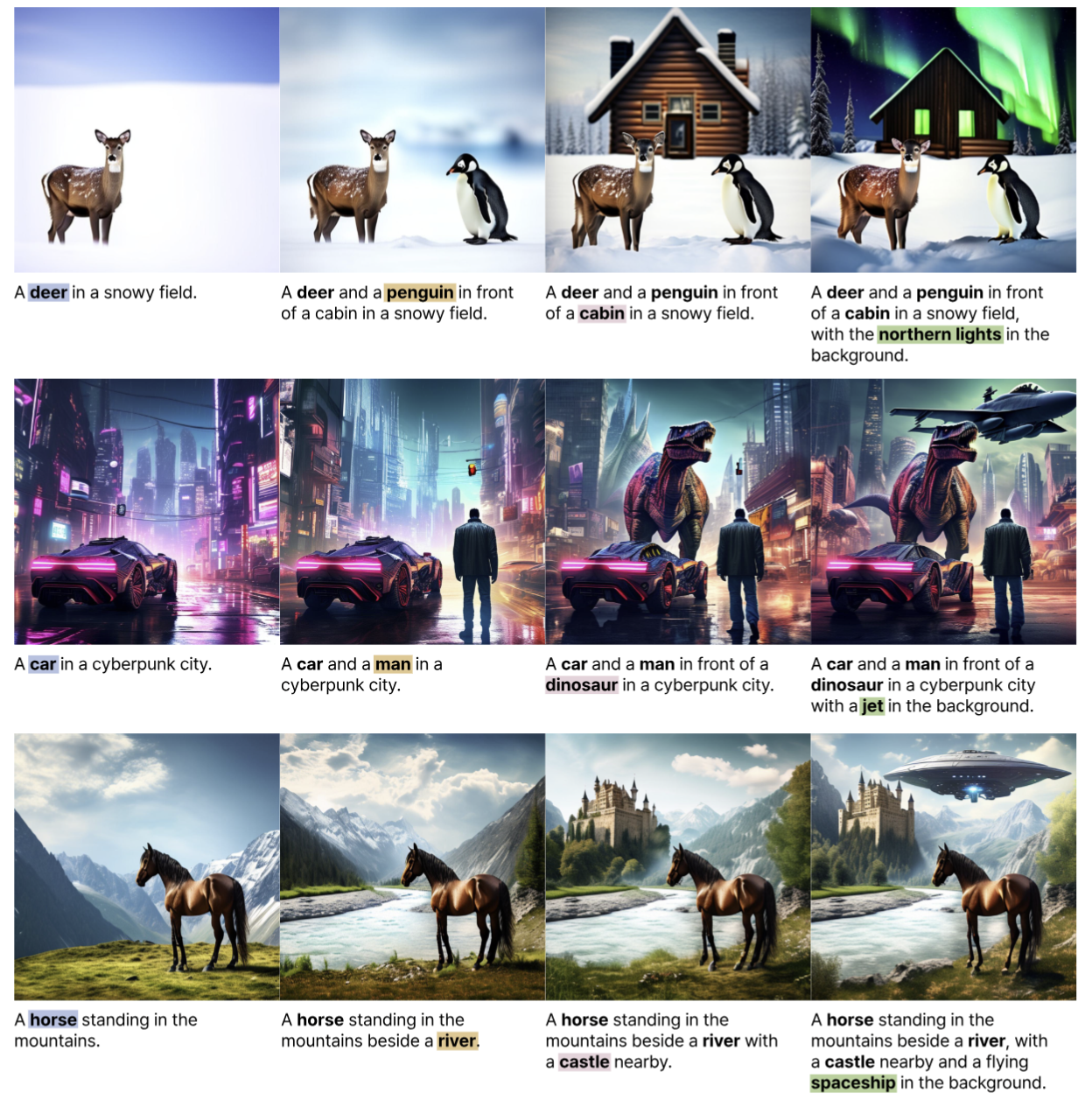

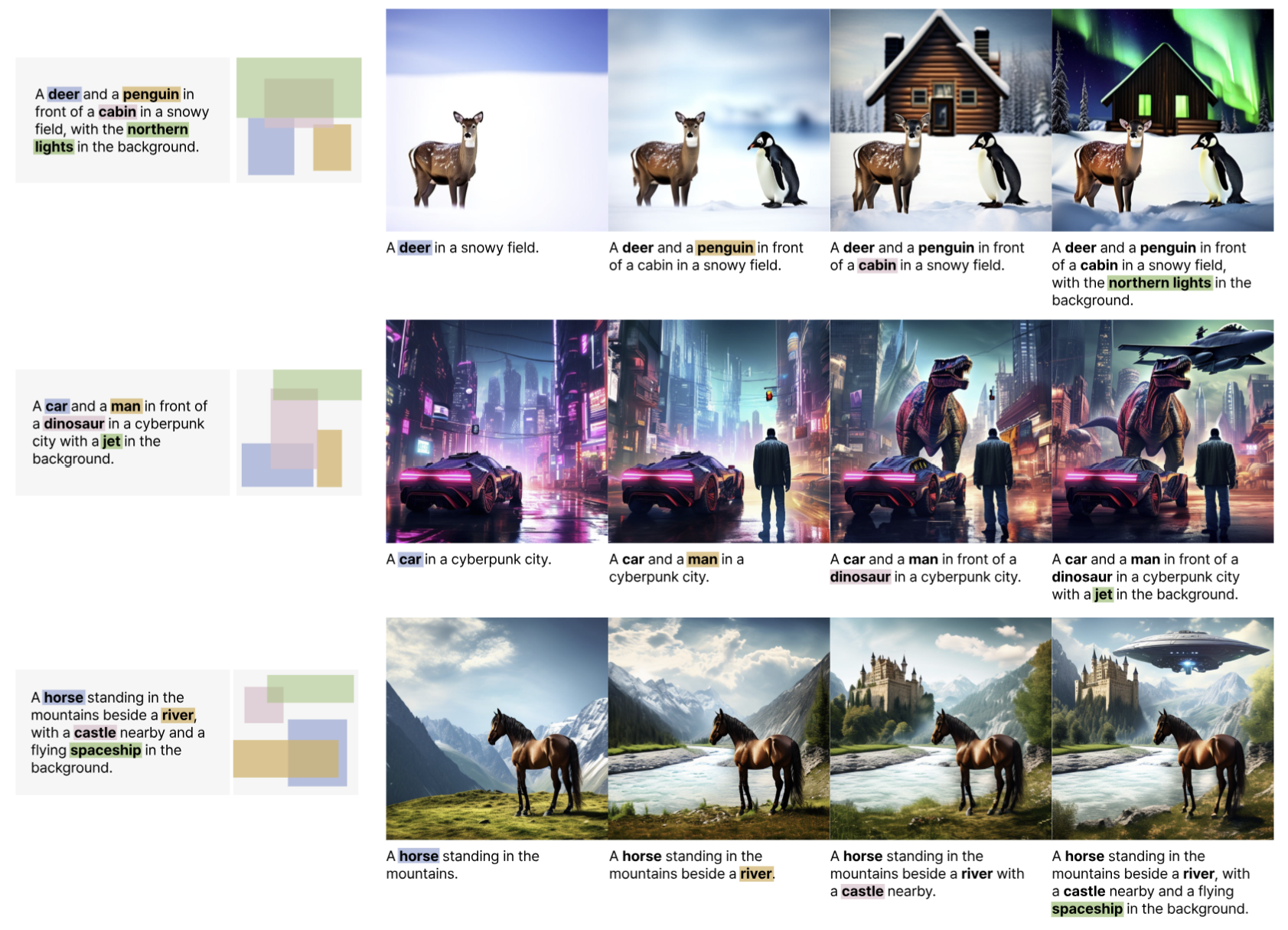

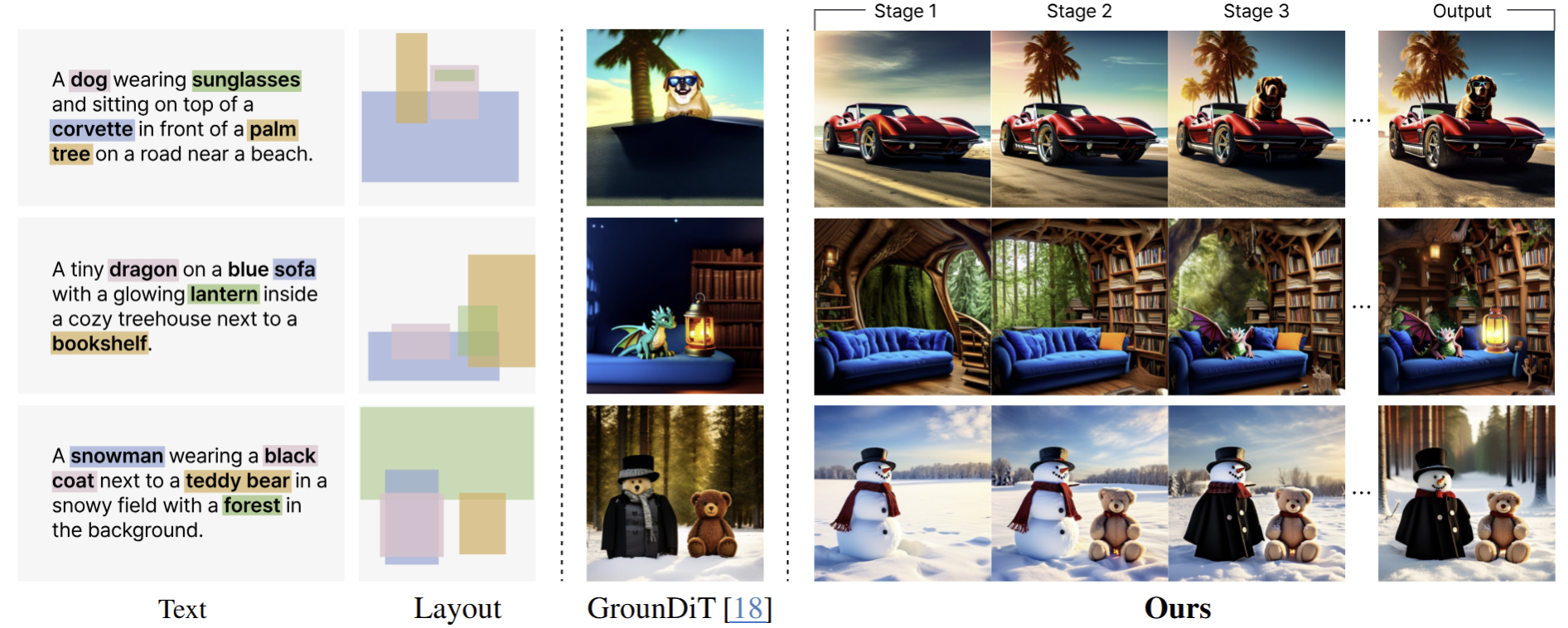

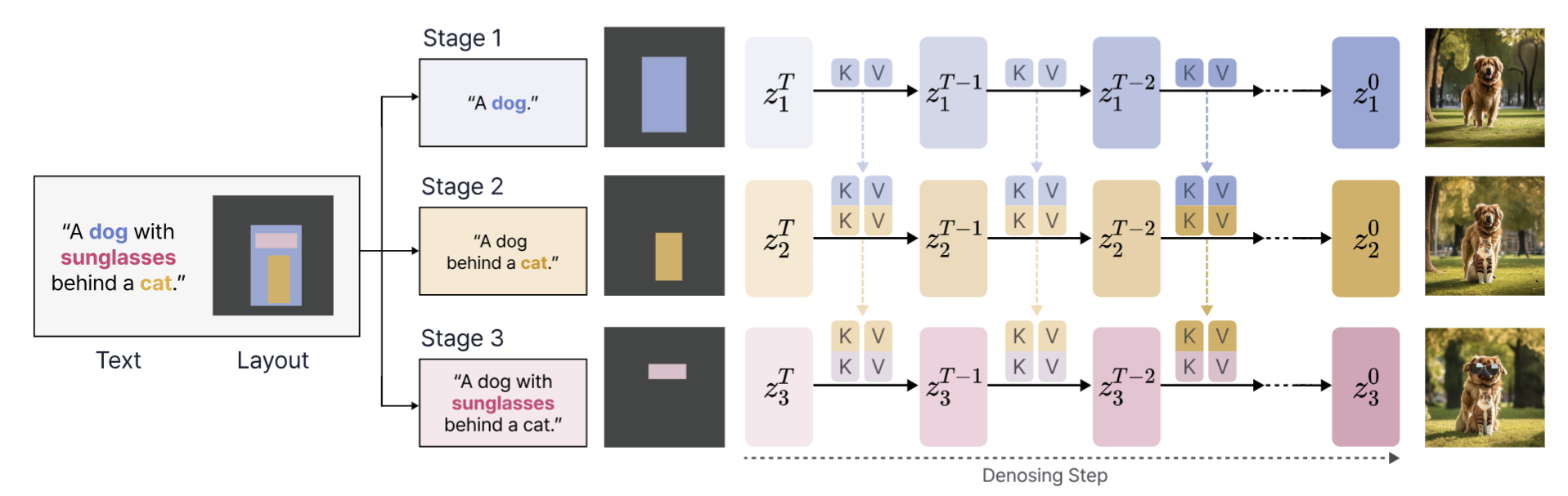

A training-free, stagewise diffusion framework that decomposes complex layouts into simpler sub-scenes for better compositional coherence.

Background and Objectives

Controllable image generation is critical for interactive human-AI co-creation systems, where users need fine-grained spatial control. Existing training-free layout-to-image methods struggle with overlapping regions because they enforce mutually exclusive attention across regions. Where regions overlap, this produces conflicting optimization signals and degrades compositional coherence.

Methods

We introduced a training-free, stagewise diffusion framework that decomposes a complex layout into simpler sub-scenes. The method grounds objects progressively through joint autoregressive sampling, with earlier stages serving as a persistent scene scaffold for later ones. I also worked on the constraint-aware attention mechanisms, which balance emphasis on the current object against global scene consistency.
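
As a rough illustration of the decomposition idea only, the sketch below groups layout boxes into stages so that no two boxes within a stage overlap. The greedy grouping rule, the box format, and the names `overlaps` and `split_into_stages` are assumptions made for this example; the actual decomposition used in StageDiffusion is not described here and may differ.

```python
from typing import List, Tuple

# Hypothetical box format: (x0, y0, x1, y1) in normalized image coordinates.
Box = Tuple[float, float, float, float]


def overlaps(a: Box, b: Box) -> bool:
    """Return True if the two boxes share a region of positive area."""
    return min(a[2], b[2]) > max(a[0], b[0]) and min(a[3], b[3]) > max(a[1], b[1])


def split_into_stages(boxes: List[Box]) -> List[List[int]]:
    """Greedily place each box index in the first stage where it overlaps nothing.

    This is an illustrative heuristic, not the framework's actual rule.
    """
    stages: List[List[int]] = []
    for i, box in enumerate(boxes):
        for stage in stages:
            if not any(overlaps(box, boxes[j]) for j in stage):
                stage.append(i)
                break
        else:
            # The box overlaps something in every existing stage: open a new one.
            stages.append([i])
    return stages


# Toy layout: boxes 0 and 1 overlap; box 2 overlaps neither.
layout = [(0.0, 0.0, 0.5, 0.5), (0.4, 0.4, 0.9, 0.9), (0.6, 0.0, 1.0, 0.3)]
stages = split_into_stages(layout)
```

In this toy layout the two overlapping boxes land in different stages, so each stage is a simpler sub-scene with no internal conflicts. In a stagewise sampler, the output of an earlier stage would then act as the scaffold for the next one.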

My Role

I empirically analyzed how compositional signals emerge in intermediate representations using attention analysis and linear probing. The findings informed key design decisions in our stagewise factorization.
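
For readers unfamiliar with linear probing, here is a minimal sketch of the general technique: a linear classifier is trained on frozen features to test whether a property is linearly decodable from them. Everything specific here is an assumption for illustration. The features are synthetic stand-ins for intermediate diffusion activations, the binary label is a hypothetical property, and a simple perceptron takes the place of whichever probe was actually used in the project.

```python
import random
from typing import List, Sequence, Tuple


def score(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Linear score w.x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_linear_probe(
    feats: List[List[float]], labels: List[int], epochs: int = 20
) -> Tuple[List[float], float]:
    """Fit a perceptron on frozen features; labels are -1 or +1."""
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            if y * score(w, b, x) <= 0:  # misclassified: nudge the boundary
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
    return w, b


def probe_accuracy(
    w: Sequence[float], b: float, feats: List[List[float]], labels: List[int]
) -> float:
    """Fraction of examples whose sign is predicted correctly."""
    correct = sum(1 for x, y in zip(feats, labels) if y * score(w, b, x) > 0)
    return correct / len(labels)


# Synthetic features: the first coordinate encodes the label with a margin,
# the remaining three coordinates are noise.
rng = random.Random(0)
labels = [1 if i % 2 == 0 else -1 for i in range(200)]
feats = [
    [y * (1.0 + rng.random())] + [rng.uniform(-1.0, 1.0) for _ in range(3)]
    for y in labels
]

w, b = train_linear_probe(feats, labels)
accuracy = probe_accuracy(w, b, feats, labels)
```

High probe accuracy suggests the property is linearly readable from the representation. In practice the probe would be evaluated on held-out data, and comparing accuracy across layers or timesteps indicates where the signal emerges.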

Results and Outputs

This work was submitted to CVPR 2026.